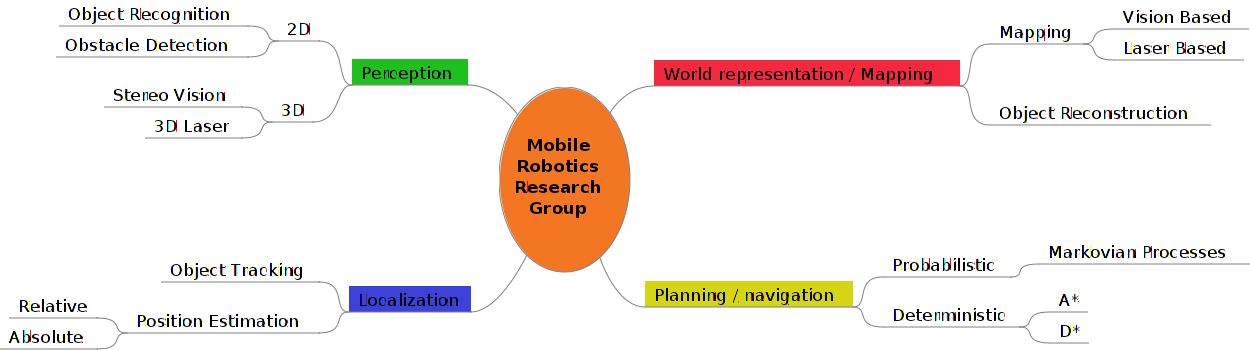

Visual Odometer System to Build Feature Based Maps

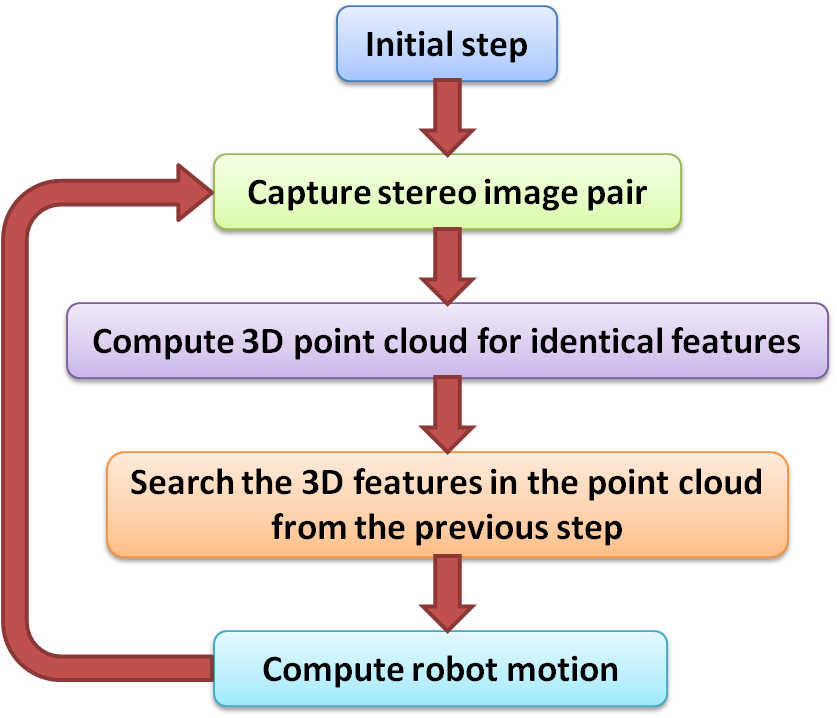

A visual odometer system addresses the problem of computing the displacement of a mobile robot by analyzing the consecutive stereo image pairs captured during its motion. The developed algorithm (diagram in Fig. 1) measures the relative translation and rotation of the vehicle with respect to the surrounding environment, which is represented by the 3D image features obtained from the stereo pairs. In the video, the robot positions computed by the visual odometer system are marked with red dots in the lower left corner (on an aerial view of the scene).
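A visual odometer turns frame-to-frame estimates into a trajectory like the red dots in the video by chaining relative motions. The following is a minimal sketch of that chaining step. It is simplified to the plane (x, y, heading) and assumes each stereo pair yields a relative motion `(dx, dy, dtheta)` expressed in the robot's previous frame. The function names and the square test path are hypothetical. The published algorithm estimates the motion from 3D features, and that part is not reproduced here.

```python
import math

# Illustrative sketch; function names and the test motion are hypothetical.


def compose(pose, delta):
    """Apply a relative motion (dx, dy, dtheta), expressed in the robot's
    current frame, to a global pose (x, y, theta)."""
    x, y, th = pose
    dx, dy, dth = delta
    # Rotate the local displacement into the global frame, then translate.
    gx = x + dx * math.cos(th) - dy * math.sin(th)
    gy = y + dx * math.sin(th) + dy * math.cos(th)
    # Wrap the heading to [-pi, pi].
    gth = math.atan2(math.sin(th + dth), math.cos(th + dth))
    return (gx, gy, gth)


def integrate(deltas, start=(0.0, 0.0, 0.0)):
    """Chain frame-to-frame motion estimates into a list of global poses."""
    poses = [start]
    for d in deltas:
        poses.append(compose(poses[-1], d))
    return poses


# Hypothetical motion: four 1 m steps, each ending with a 90-degree left turn.
trajectory = integrate([(1.0, 0.0, math.pi / 2)] * 4)
```

Each pose is built on the previous one, so an error in a single relative estimate propagates to every later pose. This is why odometry drift grows with the distance travelled.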

Fig. 1. Diagram of the visual odometer algorithm

Video with Results

A. Majdik; L. Tamas; M. Popa; I. Szöke; Gh. Lazea, Visual Odometer System to Build Feature Based Maps for Mobile Robot Navigation, 18th Mediterranean Conference on Control and Automation, ISBN: 978-1-4244-8090-6, pp.: 1200-1205, Marrakech, Morocco, 2010

Solving the Kidnapped Robot Problem

|

|

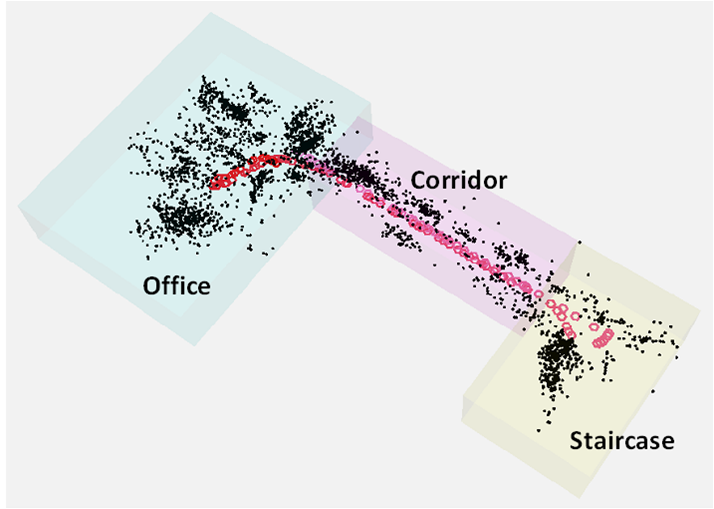

| Fig. 1. Selected images captured by the mobile platform during the mapping process | Fig. 2. Generated map, containing the 3D image features |

|

|

| Fig. 3. Images taken by the mobile robot

to localize itself online |

Fig. 4. The robot automatically realizes

that he is at the end of the corridor (aerial view) |

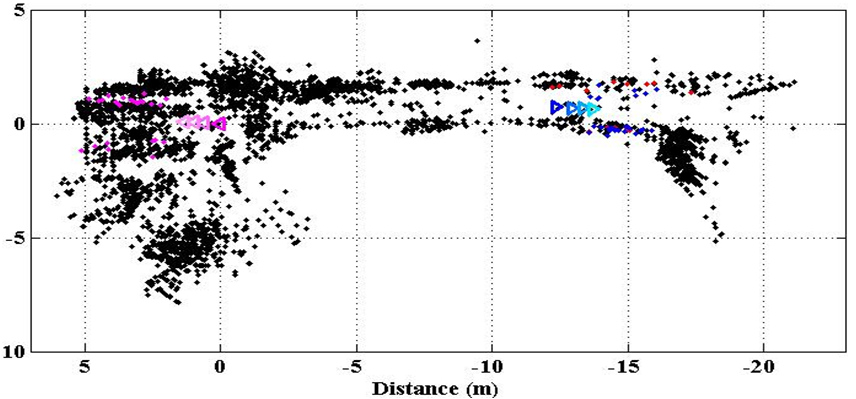

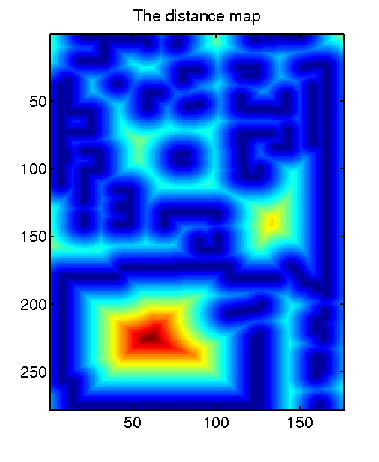

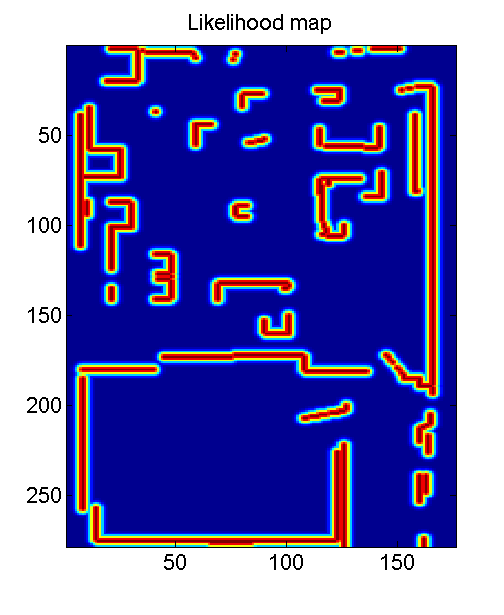

Laser Based 2D Mapping & Localization

Laser-based 2D mapping is useful for representing indoor environments. A map built with ICP (Iterative Closest Point) scan matching can later serve as the basis for grid, distance, or probabilistic maps.
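The ICP step can be sketched as follows. This is a generic point-to-point ICP in plain Python, not the group's implementation. Correspondences come from brute-force nearest neighbours, and each iteration applies the closed-form least-squares 2D rotation and translation. The function names and the L-shaped test scan are hypothetical.

```python
import math

# Illustrative sketch; function names and the test scan are hypothetical.


def nearest(p, cloud):
    """Brute-force nearest neighbour of point p in a list of 2D points."""
    return min(cloud, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def best_rigid_2d(src, dst):
    """Closed-form least-squares rotation angle and translation that map the
    paired points src[i] onto dst[i]."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        num += px * qy - py * qx  # cross terms (sine component)
        den += px * qx + py * qy  # dot terms (cosine component)
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    # The translation maps the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def icp(scan, ref, iterations=20):
    """Align scan to ref; return the accumulated (theta, tx, ty) and the aligned points."""
    theta_tot, tx_tot, ty_tot = 0.0, 0.0, 0.0
    current = list(scan)
    for _ in range(iterations):
        pairs = [nearest(p, ref) for p in current]     # data association
        theta, tx, ty = best_rigid_2d(current, pairs)  # best transform for these pairs
        c, s = math.cos(theta), math.sin(theta)
        current = [(c * x - s * y + tx, s * x + c * y + ty) for x, y in current]
        # Compose the increment with the transform accumulated so far.
        tx_tot, ty_tot = c * tx_tot - s * ty_tot + tx, s * tx_tot + c * ty_tot + ty
        theta_tot += theta
    return theta_tot, tx_tot, ty_tot, current


# Hypothetical corridor corner and the same scan seen after a small robot motion.
ref = [(0.5 * i, 0.0) for i in range(9)] + [(0.0, 0.5 * i) for i in range(1, 9)]
a, t = 0.02, (0.1, -0.05)
scan = [(math.cos(a) * x - math.sin(a) * y + t[0],
         math.sin(a) * x + math.cos(a) * y + t[1]) for x, y in ref]
theta, tx, ty, aligned = icp(scan, ref)
```

Brute-force matching is quadratic in the number of points, and a k-d tree or grid lookup is the usual replacement for real scans. ICP also converges to the correct alignment only when the initial guess is close, which is why consecutive scans with small motion suit it well.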

Fig. 1. Distance map

Fig. 2. Likelihood map

Fig. 3. Laser measurement projected on the map

Fig. 4. Likelihood map of the position of the robot with the laser measurement

L. Tamas: Sensor Fusion Based Position Estimation for Mobile Robots, PhD thesis, 2009.

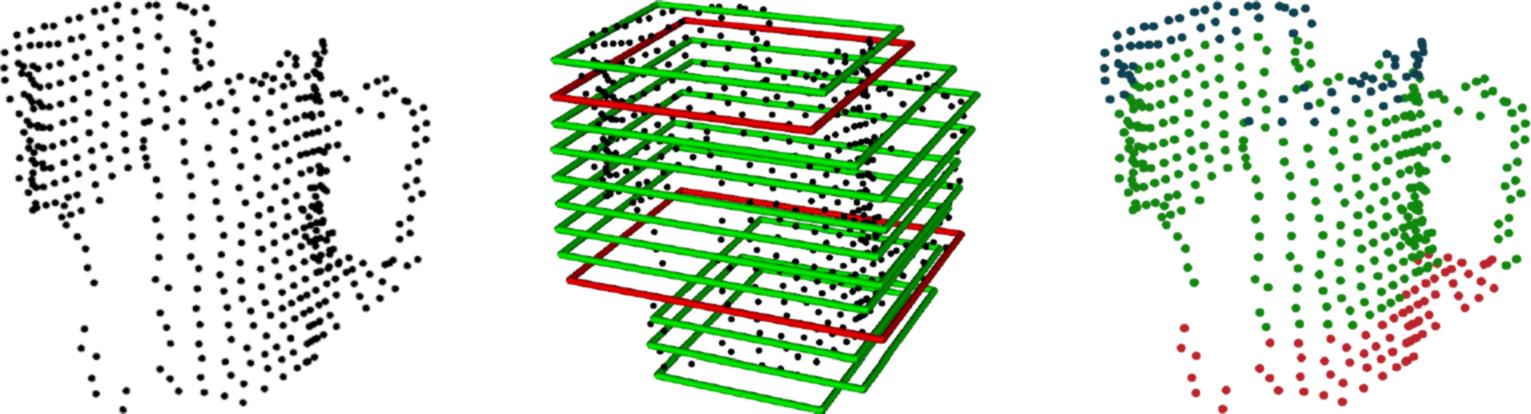

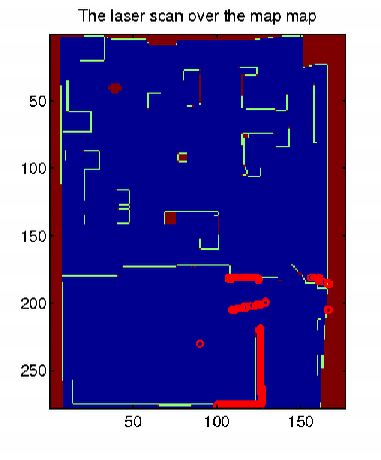

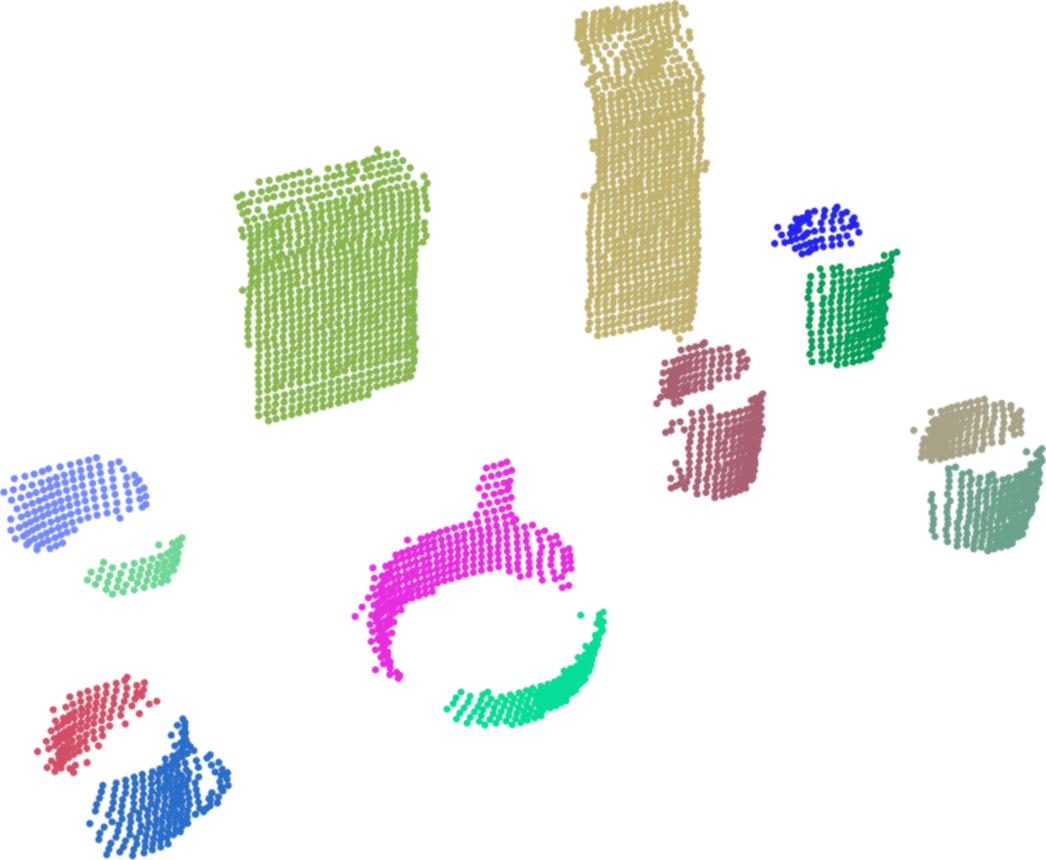

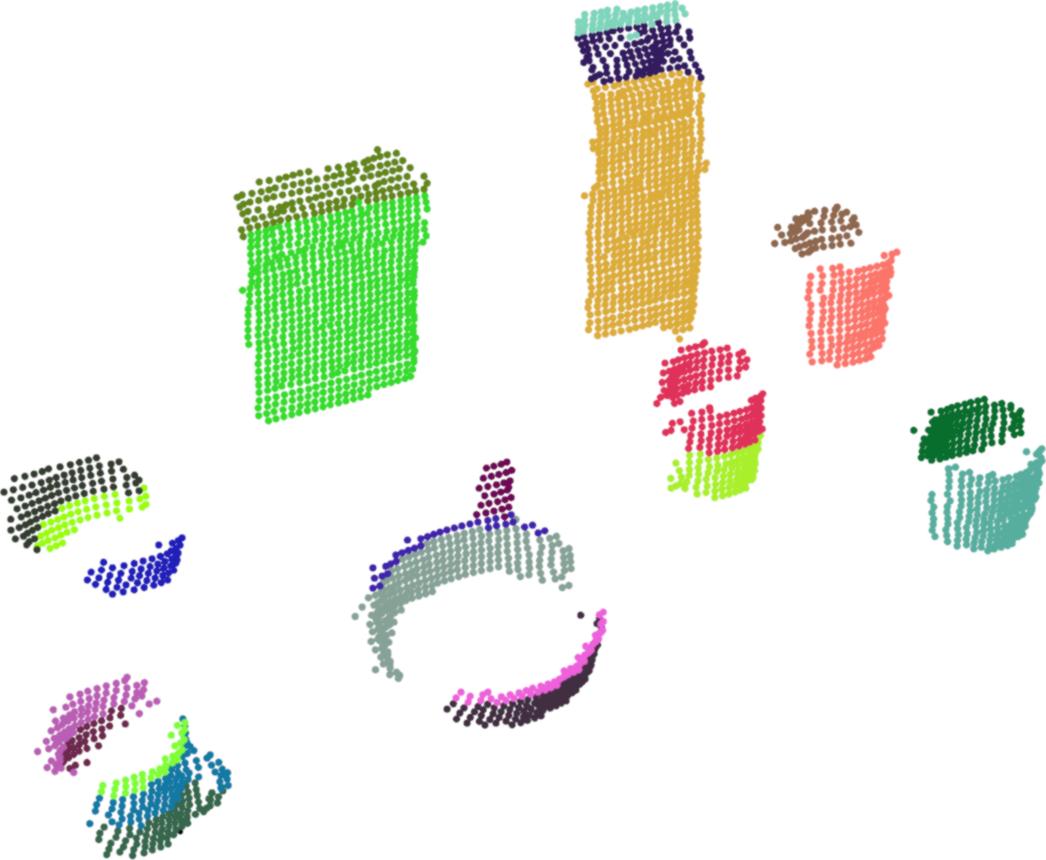

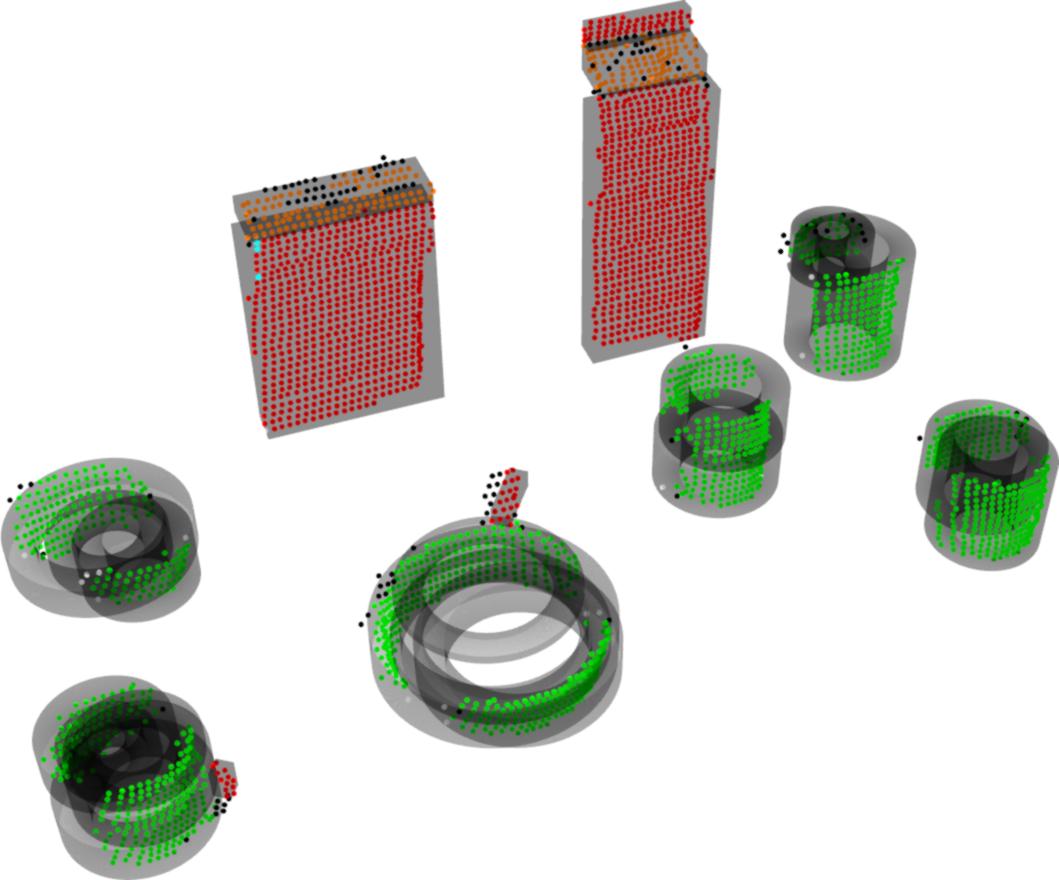

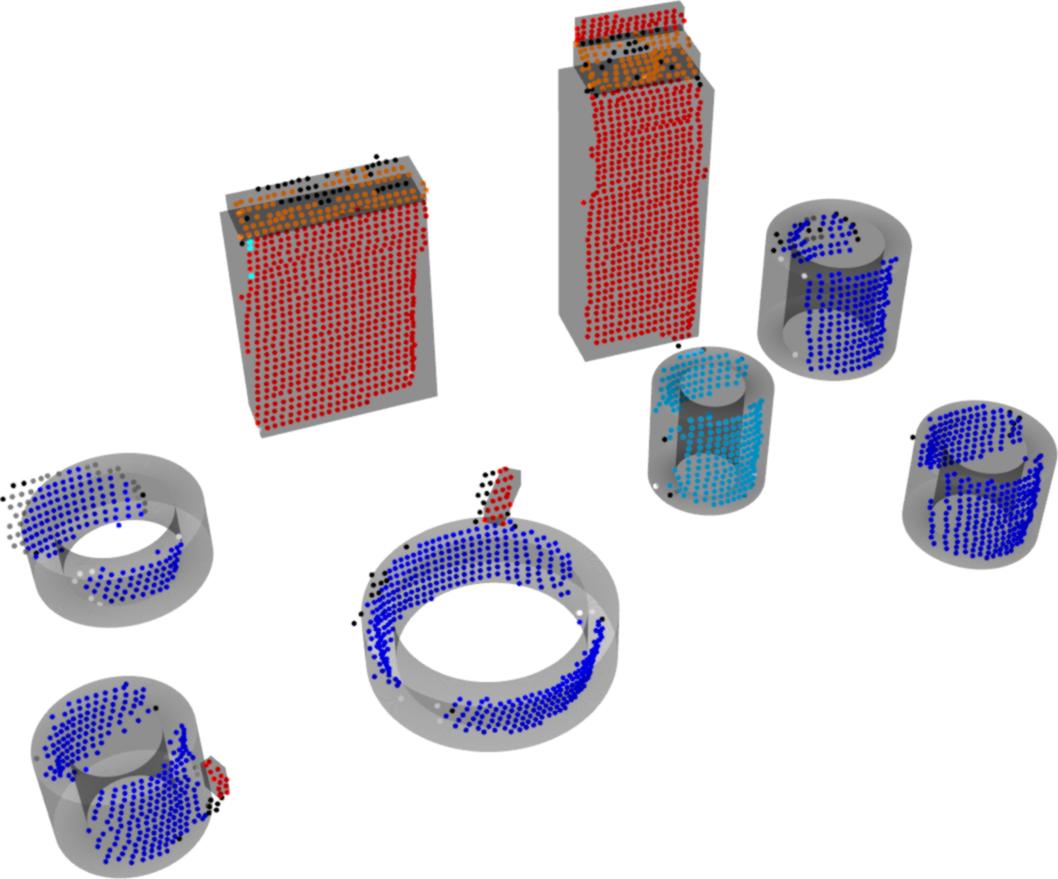

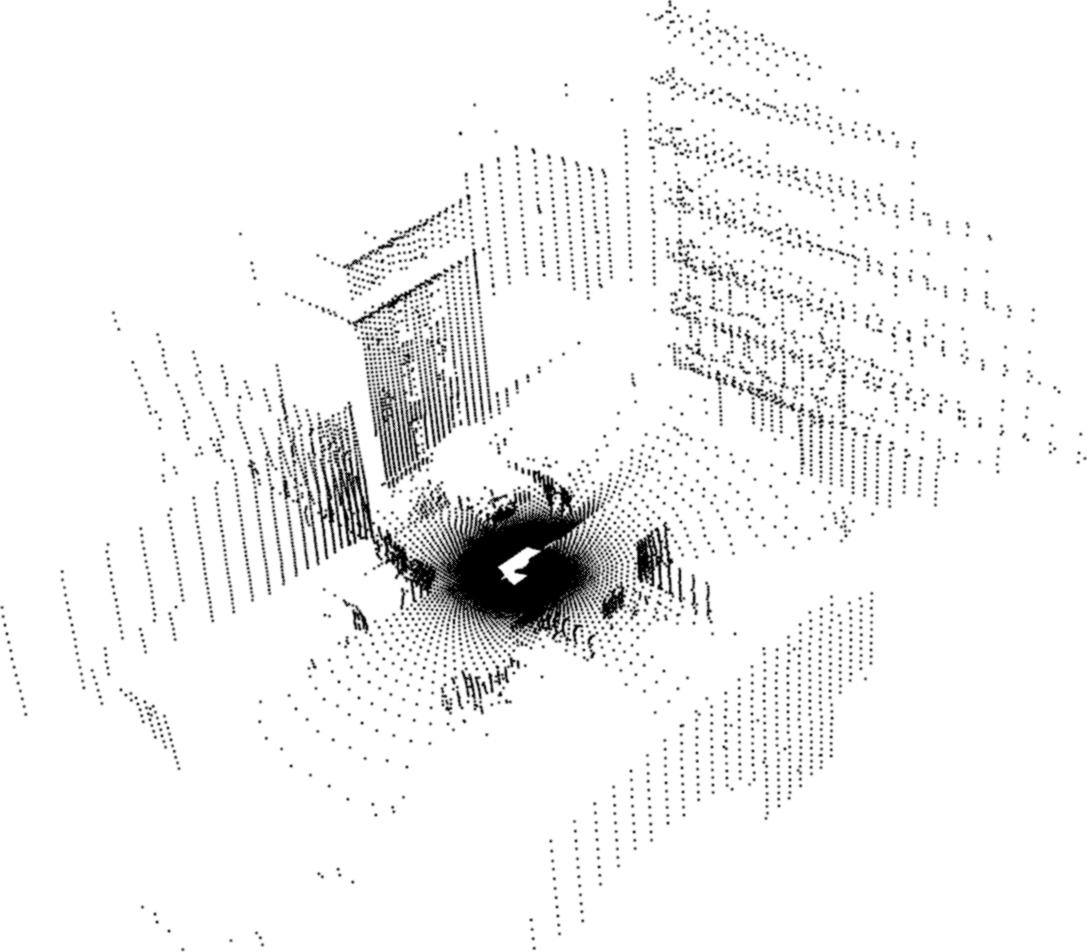

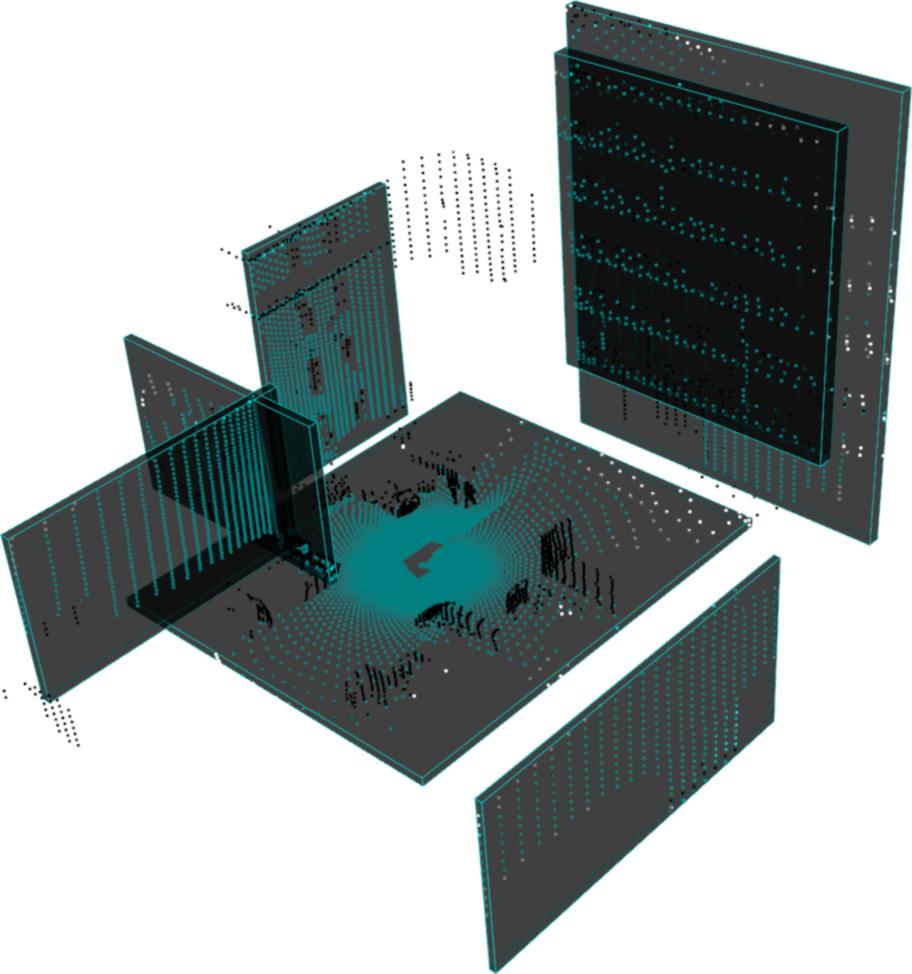

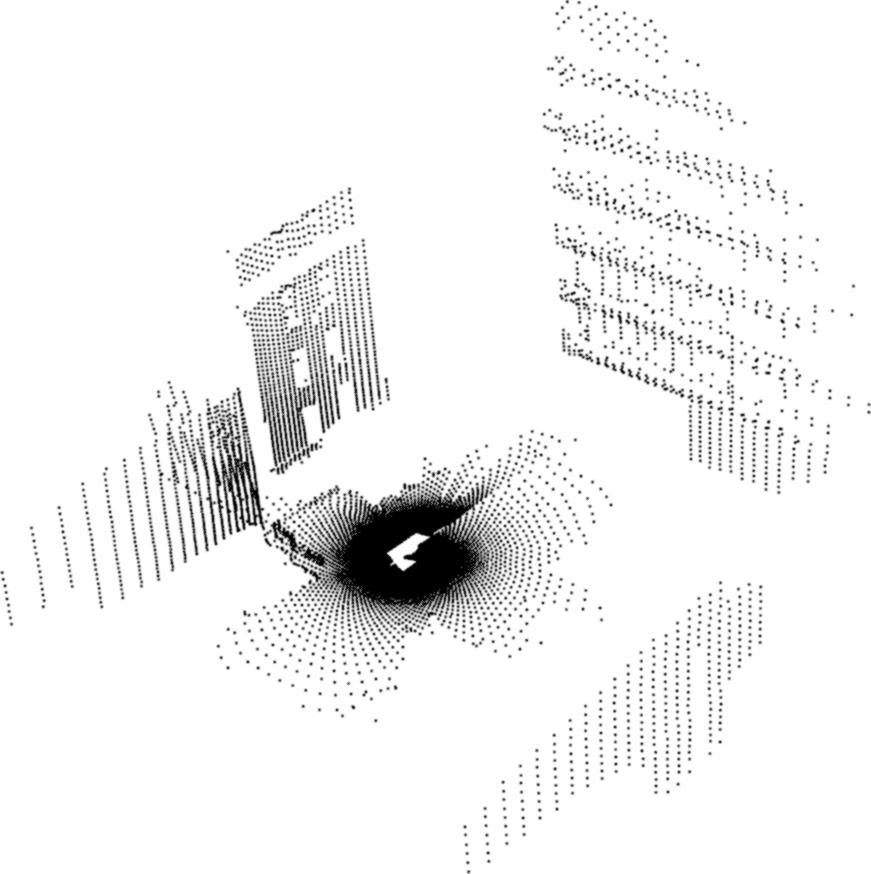

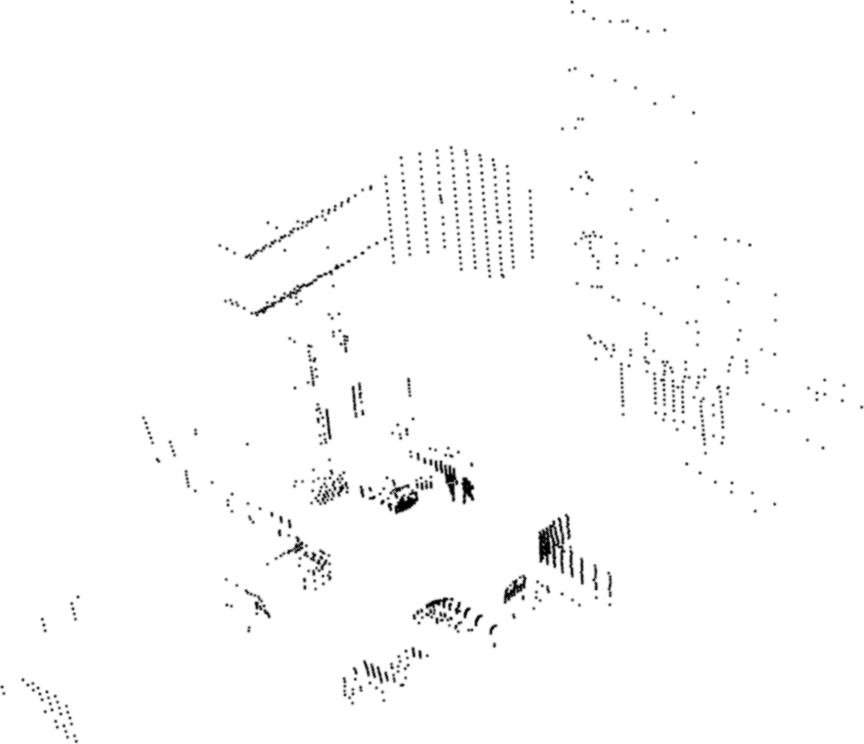

Keywords: Segmentation, Layering, Fitting, Correction, Reconstruction, Verification

Description:

• RANdom SAmple Consensus (RANSAC) and Hough Transform (HT)

• Region Growing Method for Clustering Objects

• Identifying Boundary Points of Regions’ Footprints

• Quadrilateral Approximation Technique (QAT) for Clusters using Principal Component Analysis (PCA)

• Automatic Decomposition of Clusters into Layers for Improved Reconstruction

• Hierarchical Model Fitting and Validation

• Methods for Dealing with Over- and Under-Segmentation Problems by Merging and Splitting of Models

• Identifying Occluded Parts and Inconsistencies of Models

Objectives:

• 3D Reconstruction and Verification of Object Models using Automatic Layering

• Calibration and Fusion of 3D and Image Data for Initial Processing

• Feature Extraction and Classification for Object Recognition
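The source does not detail the Quadrilateral Approximation Technique. The sketch below assumes that QAT fits each cluster's 2D footprint with a rectangle aligned to the footprint's principal axes, and shows a minimal PCA-based version of that idea. The function name and the test data are hypothetical.

```python
import math

# Illustrative sketch; the function name and test footprint are hypothetical.


def pca_rectangle(points):
    """Approximate a 2D footprint by a rectangle aligned with its principal
    axes; returns the four corners in counter-clockwise order."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    cxx = sum((p[0] - mx) ** 2 for p in points) / n
    cyy = sum((p[1] - my) ** 2 for p in points) / n
    cxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    # Angle of the dominant eigenvector of the 2x2 covariance matrix.
    phi = 0.5 * math.atan2(2 * cxy, cxx - cyy)
    ux, uy = math.cos(phi), math.sin(phi)  # major axis
    vx, vy = -uy, ux                       # minor axis (major rotated by +90 degrees)
    # Extent of the footprint along each principal axis.
    a = [(p[0] - mx) * ux + (p[1] - my) * uy for p in points]
    b = [(p[0] - mx) * vx + (p[1] - my) * vy for p in points]
    return [(mx + s * ux + t * vx, my + s * uy + t * vy)
            for s, t in ((min(a), min(b)), (max(a), min(b)),
                         (max(a), max(b)), (min(a), max(b)))]


# Hypothetical footprint: a 4 m x 1 m table top sampled on a grid, rotated by 30 degrees.
ang = math.radians(30)
grid = [(0.5 * i, 0.25 * j) for i in range(9) for j in range(5)]
footprint = [(math.cos(ang) * x - math.sin(ang) * y,
              math.sin(ang) * x + math.cos(ang) * y) for x, y in grid]
corners = pca_rectangle(footprint)
```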

Screenshots:

Fig. 1. Analyzing the 2D Footprints of Objects

Fig. 2. Automatic Layering of 3D Objects

Fig. 3. Solving the Over-segmentation Problem by Merging of Models

Fig. 4. Overcoming the Under-segmentation Problem by Splitting of Models

Automatic Layered 3D Reconstruction and Verification of Object Models for Grasping

Overcoming the Under-segmentation Problem

Furniture

Detection

Furniture

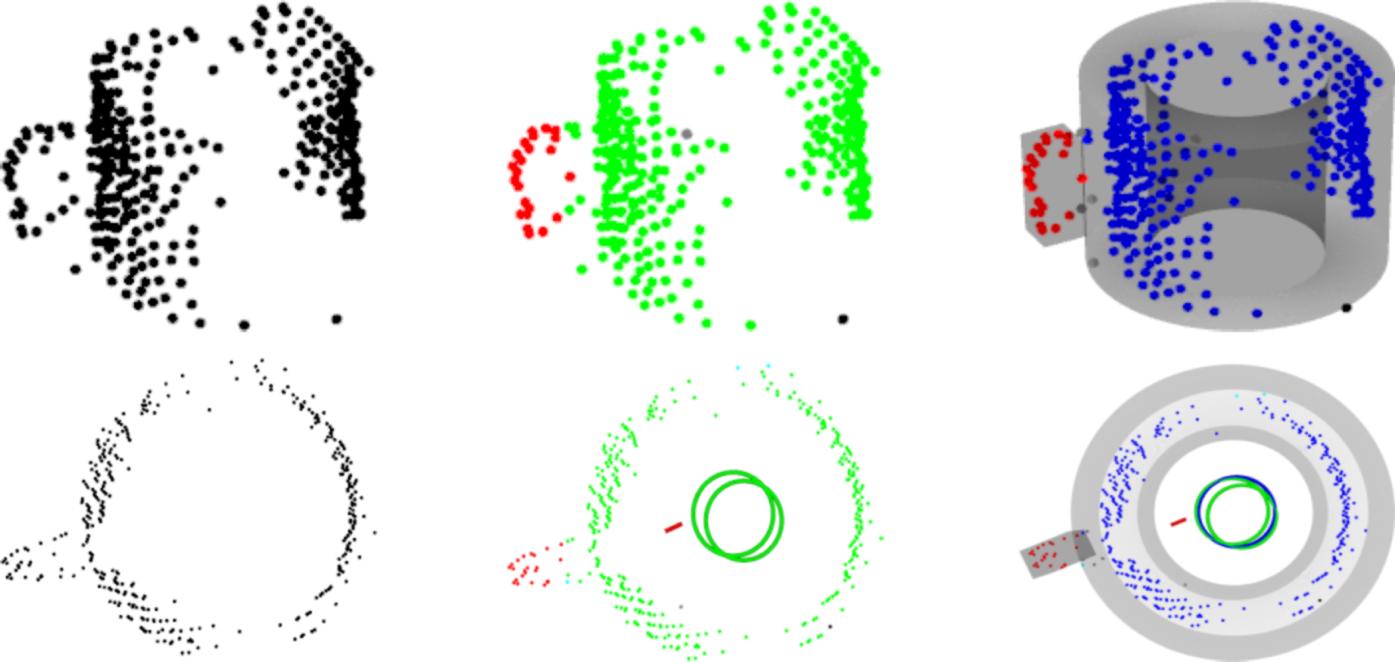

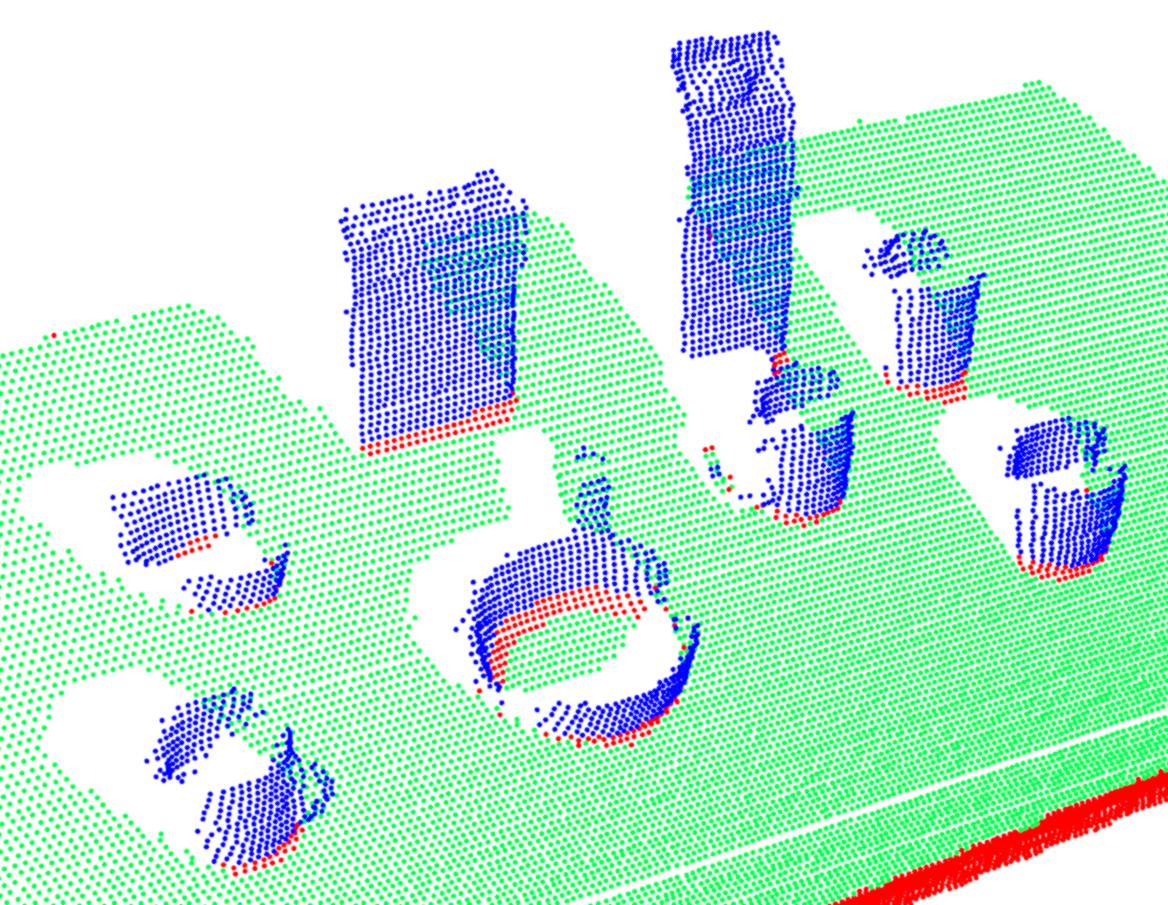

DetectionKeywords: Fitting, Detection, Segmentation, Furniture, Fixtures

Description:

• Segmentation of planar surfaces and furniture fixtures from real-world point cloud data-sets.

• Based on the RANSAC algorithm with axis-aligned fitting of planes.

• Using the Robot Operating System (ROS) and the Point Cloud Library (PCL).
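Axis-aligned RANSAC can be sketched in plain Python as shown below. The sketch assumes that the cloud's z axis points up, so horizontal furniture surfaces such as table tops and shelves are planes z = c. Because the normal is fixed, a single sampled point is enough to form a hypothesis. This illustrates the idea and is not the PCL code used in the work. The function name, threshold, iteration count and test scene are hypothetical.

```python
import random

# Illustrative sketch; the function name, parameters and scene are hypothetical.


def ransac_horizontal_plane(points, threshold=0.01, iterations=100, seed=0):
    """RANSAC for the horizontal plane z = c supported by the most points.

    The normal is fixed to the z axis, so a single sampled point defines a
    hypothesis. Returns the refined height and the inlier points."""
    rng = random.Random(seed)
    best = []
    for _ in range(iterations):
        c = rng.choice(points)[2]  # one-point hypothesis
        inliers = [p for p in points if abs(p[2] - c) < threshold]
        if len(inliers) > len(best):
            best = inliers
    # Refine the height as the mean z of the consensus set.
    return sum(p[2] for p in best) / len(best), best


# Hypothetical scene: a table top at 0.75 m, a patch of floor and some clutter.
gen = random.Random(1)
table = [(gen.uniform(0, 1), gen.uniform(0, 0.6), 0.75 + gen.uniform(-0.004, 0.004)) for _ in range(60)]
floor = [(gen.uniform(0, 2), gen.uniform(0, 2), gen.uniform(-0.004, 0.004)) for _ in range(20)]
clutter = [(gen.uniform(0, 2), gen.uniform(0, 2), gen.uniform(1.0, 1.5)) for _ in range(20)]
height, inliers = ransac_horizontal_plane(table + floor + clutter)
```

Once the cloud is aligned with the room's walls, vertical fronts such as cabinet doors could be handled the same way with x = c or y = c.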

Segmentation of Furniture and Fixtures

Segmentation of Furniture Surfaces

L. C. Goron, Z. C. Marton, G. Lazea, M. Beetz, "Robustly Segmenting Cylindrical and Box-like Objects in Cluttered Scenes using Depth Cameras," Accepted for publication at the 7th German Conference on Robotics (ROBOTIK), Munich, Germany, 2012.

N. Blodow, L. C. Goron, Z. C. Marton, D. Pangercic, T. Rühr, M. Tenorth, M. Beetz, "Autonomous Semantic Mapping for Robots Performing Everyday Manipulation Tasks in Kitchen Environments," IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2011.

L. C. Goron, Z. C. Marton, G. Lazea, M. Beetz, "Automatic Layered 3D Reconstruction of Simplified Object Models for Grasping," Proceedings of the 41st International Symposium on Robotics (ISR), Munich, Germany, 2010.

Object Recognition Based on Monovision

Object recognition in different types of images is one of the most important tasks in computer vision. It supports landmark detection, obstacle detection, map building and path planning for a mobile robot.

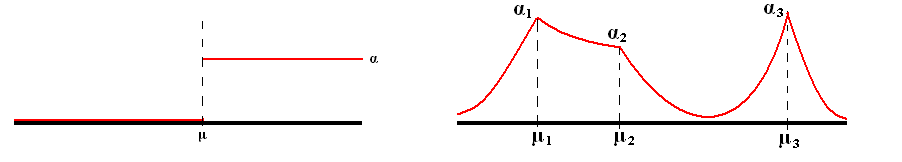

Left: Classical weighting mechanism. Right: The proposed weighting mechanism.

With this approach, the detected objects are more likely to resemble the objects contained in the positive training set. Some results are presented below: using the new algorithm (right), the number of false positive detections is reduced.

False positives for people detection. Left: Classical Adaboost algorithm results. Right: The proposed algorithm results.
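The proposed weighting mechanism is not described in enough detail to reproduce. For comparison, the classical discrete AdaBoost reweighting step (shown on the left) can be sketched as follows, with labels in {-1, +1}. Misclassified samples gain weight, so the next weak classifier concentrates on them. The function name is hypothetical.

```python
import math

# Illustrative sketch of classical discrete AdaBoost reweighting; the name is hypothetical.


def adaboost_reweight(weights, labels, predictions):
    """One boosting round.

    weights: current sample weights (summing to 1)
    labels, predictions: values in {-1, +1}
    Returns (alpha, new_weights)."""
    err = sum(w for w, y, h in zip(weights, labels, predictions) if y != h)
    err = min(max(err, 1e-10), 1 - 1e-10)   # avoid log(0) for perfect or useless classifiers
    alpha = 0.5 * math.log((1 - err) / err)  # vote of this weak classifier
    new = [w * math.exp(-alpha * y * h) for w, y, h in zip(weights, labels, predictions)]
    z = sum(new)                             # normalisation constant
    return alpha, [w / z for w in new]


# Hypothetical round: four equally weighted samples, the last one misclassified.
alpha, new_weights = adaboost_reweight([0.25] * 4, [1, 1, -1, -1], [1, 1, -1, 1])
```

In this example the weighted error is 0.25. After reweighting, the misclassified sample carries half of the total weight (0.5), and each correctly classified sample drops to 1/6.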

Adaptive Appearance Based Loop-Closing in Heterogeneous Environments

This work addressed the problem of detecting loop-closure situations whenever an autonomous vehicle returns to a previously visited place in the navigation area. An appearance-based perspective was adopted, using images gathered by the on-board vision sensors for navigation tasks in heterogeneous environments characterized by buildings and urban furniture together with pedestrians and different types of vegetation. We proposed a novel probabilistic on-line weight-updating algorithm for the bag-of-words description of the gathered images. It takes into account both the prior knowledge derived from an off-line learning stage and the accuracy of the decisions taken by the algorithm over time. An intuitive measure of the ability of a given word to contribute to the detection of a correct loop closure is presented. The proposed strategy was extensively tested on well-known datasets obtained from challenging large-scale environments.
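The paper's on-line weight-updating rule is not reproduced here. As background, a common bag-of-words baseline weights each visual word by tf-idf and ranks past images by cosine similarity. The minimal sketch below follows that baseline. It assumes images have already been quantized into integer word IDs and uses a hypothetical similarity threshold. All names are hypothetical.

```python
import math
from collections import Counter

# Illustrative tf-idf baseline; names, data and threshold are hypothetical.


def idf_table(database):
    """Inverse document frequency of every word over the stored images."""
    n = len(database)
    df = Counter(w for img in database for w in set(img))
    return {w: math.log(n / c) for w, c in df.items()}


def tfidf(words, idf):
    """Sparse tf-idf vector of one image (word -> weight)."""
    counts = Counter(words)
    return {w: (c / len(words)) * idf.get(w, 0.0) for w, c in counts.items()}


def cosine(a, b):
    dot = sum(v * b.get(w, 0.0) for w, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def loop_closure_candidates(query, database, threshold=0.5):
    """Indices and scores of stored images similar enough to the query."""
    idf = idf_table(database)
    q = tfidf(query, idf)
    scores = [(i, cosine(q, tfidf(img, idf))) for i, img in enumerate(database)]
    return [(i, s) for i, s in scores if s >= threshold]


# Hypothetical word IDs for three stored images and one new query image.
database = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12]]
matches = loop_closure_candidates([5, 6, 7, 9], database)
```

A word that appears in every stored image gets an idf of zero and contributes nothing. This reflects the intuition behind weighting words by how informative they are. The adaptive method described above additionally updates the weights on-line, using the algorithm's own decisions.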

Video with Results

A. Majdik; D. Gálvez-López; G. Lazea; J.A. Castellanos, Adaptive Appearance Based Loop-Closing in Heterogeneous Environments, IEEE/RSJ International Conference on Intelligent Robots and Systems, pp.: 1256 – 1263, ISSN: 2153-0858, September, IROS 2011

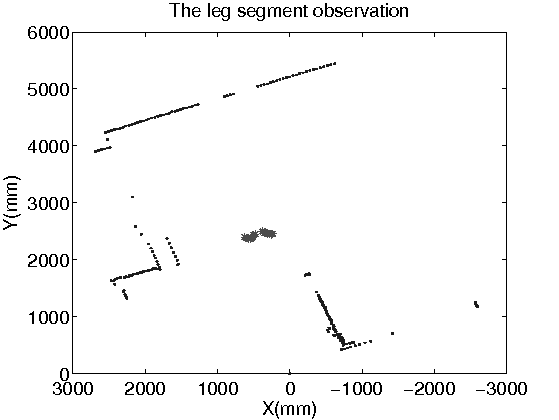

2D Laser Object Detection

The key role of probability theory in pattern recognition is demonstrated by its numerous real-world applications in which machines search for patterns in data. One example is recognizing handwritten digits, a task that requires complex computation because of the wide variability of handwriting.

In our research group's projects, pattern recognition on 2D laser data is performed with Gaussian Mixture Models (GMMs).

The Gaussian model, also called the normal distribution, is often used to model the distribution of continuous variables. The parameters of the mixture models are estimated with algorithms such as K-means and Expectation-Maximization (EM). To overcome certain limitations of these algorithms, a Bayesian approach based on the framework of variational inference can be used.

In the figure above, our research group's office was mapped with a 2D laser sensor, and the separate objects were clustered with the aforementioned algorithms. Each object is plotted in a different color.
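The EM step can be illustrated with a minimal sketch for a 2D mixture. To keep it short, the sketch restricts each component to an isotropic covariance; this is a simplification, and the general case uses full covariance matrices. The initial means would typically come from K-means. The function name and the two-cluster test data are hypothetical.

```python
import math

# Illustrative EM sketch with isotropic components; names and data are hypothetical.


def em_gmm_2d(points, means, iterations=50):
    """EM for a 2D Gaussian mixture with isotropic covariances var * I.

    points: list of (x, y); means: initial component means (e.g. from K-means).
    Returns (weights, means, variances, responsibilities)."""
    k, n = len(means), len(points)
    means = [tuple(m) for m in means]
    weights, variances = [1.0 / k] * k, [1.0] * k
    resp = []
    for _ in range(iterations):
        # E-step: posterior probability (responsibility) of each component per point.
        resp = []
        for x, y in points:
            dens = [w * math.exp(-((x - mx) ** 2 + (y - my) ** 2) / (2 * v)) / (2 * math.pi * v)
                    for w, (mx, my), v in zip(weights, means, variances)]
            total = sum(dens) or 1e-300  # avoid division by zero if every density underflows
            resp.append([d / total for d in dens])
        # M-step: re-estimate mixing weights, means and variances.
        for j in range(k):
            nj = sum(r[j] for r in resp) + 1e-12
            mx = sum(r[j] * p[0] for r, p in zip(resp, points)) / nj
            my = sum(r[j] * p[1] for r, p in zip(resp, points)) / nj
            var = sum(r[j] * ((p[0] - mx) ** 2 + (p[1] - my) ** 2)
                      for r, p in zip(resp, points)) / (2 * nj)
            weights[j], means[j] = nj / n, (mx, my)
            variances[j] = max(var, 1e-6)  # keep a component from collapsing onto one point
    return weights, means, variances, resp


# Hypothetical laser points from two objects, each a 3 x 3 patch of returns.
offsets = [-0.2, 0.0, 0.2]
pts = [(ox, oy) for ox in offsets for oy in offsets] + \
      [(5 + ox, 5 + oy) for ox in offsets for oy in offsets]
weights, means, variances, resp = em_gmm_2d(pts, [(1.0, 1.0), (4.0, 4.0)])
labels = [max(range(2), key=lambda j: r[j]) for r in resp]
```

Each laser point can then be assigned to the component with the largest responsibility, which gives one coloured cluster per object. The variance floor guards against components collapsing onto single points, a known weakness of maximum-likelihood EM.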

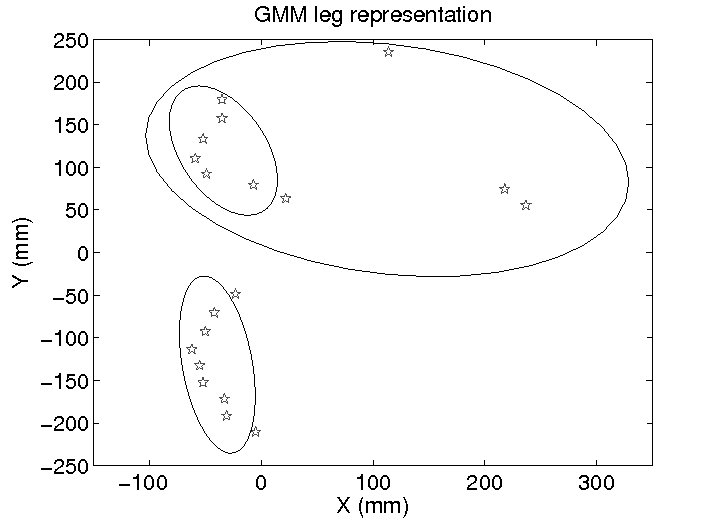

2D Laser Object Classification

Planar laser data can also be used for detection and classification. The procedure has two phases: the training phase, in which the shapes are described with GMMs, and the evaluation (classification) phase, which uses Bayesian techniques. The following results were obtained when detecting specific shapes, such as human leg pairs, in planar laser data.
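The two phases can be sketched as follows. The sketch assumes that the training phase has already produced one GMM per class. The class names, priors, component parameters and the 2D feature vector (for example segment width and leg spacing, in metres) are hypothetical. Classification picks the class with the largest posterior p(c | x), which is proportional to p(x | c) P(c).

```python
import math

# Illustrative sketch; classes, features and all model parameters are hypothetical.


def gmm_pdf(x, gmm):
    """Density of a 2D feature vector x under a GMM given as a list of
    (weight, (mean_x, mean_y), variance) tuples with isotropic covariances."""
    total = 0.0
    for w, (mx, my), v in gmm:
        d2 = (x[0] - mx) ** 2 + (x[1] - my) ** 2
        total += w * math.exp(-d2 / (2 * v)) / (2 * math.pi * v)
    return total


def classify(x, class_models, priors):
    """Return the class with the highest posterior p(c | x) and all posteriors."""
    joint = {c: gmm_pdf(x, m) * priors[c] for c, m in class_models.items()}
    evidence = sum(joint.values())
    post = {c: j / evidence for c, j in joint.items()} if evidence > 0 else dict(priors)
    return max(post, key=post.get), post


# Hypothetical trained models: one GMM per class over (segment width, leg spacing).
models = {
    "leg_pair": [(0.6, (0.15, 0.30), 0.002), (0.4, (0.20, 0.10), 0.002)],
    "other": [(1.0, (0.60, 0.00), 0.05)],
}
priors = {"leg_pair": 0.3, "other": 0.7}
label, posterior = classify((0.16, 0.28), models, priors)
```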

Fig. 1. GMM leg pair

Fig. 2. Classification results for GMM leg pairs

Fig. 3. Video with results

L. Tamas, M. Popa, Gh. Lazea, I. Szoke, A. Majdik, Laser and Vision Based Object Detection for Mobile Robots, International Journal of Mechanics and Control, Vol.: 11, No. 2, 2010, pp.: 89-95, ISSN: 1590-8844

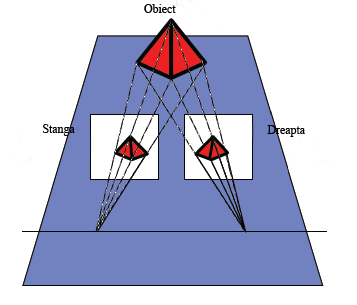

Object Detection based on Monovision

Without the depth measurements provided by laser or stereovision sensors, object detection in structured environments is a difficult task. To detect objects using monovision, we assume that any object located in the environment has a defined contour or some non-uniform texture. The object detection problem using monovision therefore reduces to the problem of edge extraction.
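The edge operator used is not specified. As an illustration of edge extraction, the following is a minimal Sobel gradient-magnitude detector in plain Python, working on a grayscale image stored as a list of rows. The fixed threshold is a simplifying assumption; detectors such as Canny add smoothing, non-maximum suppression and hysteresis. The function name and the test image are hypothetical.

```python
# Illustrative Sobel sketch; the function name and test image are hypothetical.


def sobel_edges(img, threshold):
    """Mark edge pixels (1) of a grayscale image given as a list of rows.
    Border pixels are left as non-edges (0)."""
    h, w = len(img), len(img[0])
    kx = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))    # horizontal gradient kernel
    ky = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))    # vertical gradient kernel
    edges = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    p = img[r + i - 1][c + j - 1]
                    gx += kx[i][j] * p
                    gy += ky[i][j] * p
            # Keep pixels whose gradient magnitude reaches the threshold.
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[r][c] = 1
    return edges


# Hypothetical 5 x 6 image: a dark region on the left, a bright object on the right.
image = [[0, 0, 0, 100, 100, 100] for _ in range(5)]
edges = sobel_edges(image, threshold=200)
```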

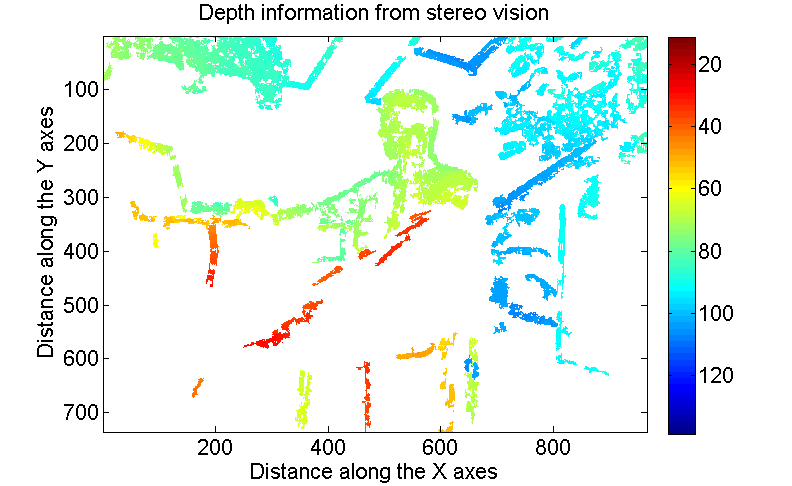

A stereo camera works similarly to human 3D perception: by observing an object from two different viewpoints, its depth can be estimated.
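Consider a rectified stereo pair with focal length f (in pixels) and baseline B. A point whose horizontal image positions differ by the disparity d lies at depth Z = f·B/d. The minimal sketch below assumes rectified images with a shared principal point (cx, cy). The function names and the camera parameters in the example are hypothetical.

```python
# Illustrative sketch; function names and camera parameters are hypothetical.


def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth Z = f * B / d for a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def triangulate(u_left, v, u_right, focal_px, baseline_m, cx, cy):
    """3D point (X, Y, Z) in the left camera frame from a matched pixel pair
    (u_left, v) / (u_right, v) on the same row of rectified images."""
    z = depth_from_disparity(focal_px, baseline_m, u_left - u_right)
    return ((u_left - cx) * z / focal_px, (v - cy) * z / focal_px, z)


# Hypothetical camera: f = 700 px, baseline 0.12 m, principal point (320, 240).
point = triangulate(362, 240, 320, 700, 0.12, 320, 240)  # about (0.12, 0.0, 2.0) m
```

Because Z is inversely proportional to d, a one-pixel disparity error matters more at long range: the depth error grows roughly as Z²/(f·B).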

Fig. 1. Stereo camera working principle

Fig. 2. Stereo image sample

Fig. 3. Stereo image processing

L. Tamas; A. Majdik; Gh. Lazea, Sensor Data Fusion Based Position Estimation Techniques in Mobile Robot Navigation, Proceedings of the European Control Conference, 2009, pp.: 4457-4462, ISBN 978-963-311-369-1

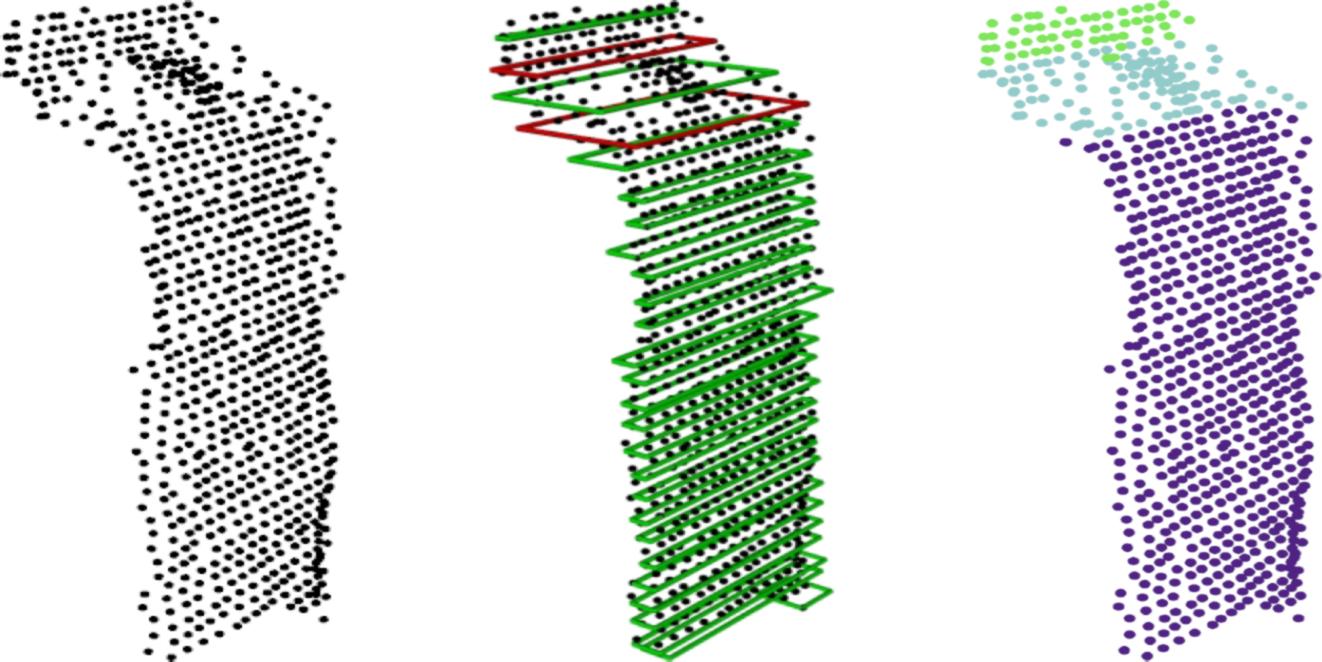

3D perception can also be achieved with a customized planar laser. The mechanical extension our group proposed for a standard planar laser scanner makes it possible to retrieve 3D information about the surrounding environment with a precision better than 1 cm.
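The mechanism of the extension is not described here. Assume it tilts (nods) the planar scanner about a horizontal axis through the scanner origin. Then each beam with range r and bearing α, taken at tilt β, maps to x = r cos α cos β, y = r sin α, z = r cos α sin β. A minimal sketch follows. The function names and values are hypothetical, and a real system must also model the axis offset and synchronise each scan with its tilt angle.

```python
import math

# Illustrative sketch assuming a nodding scanner; names and values are hypothetical.


def scan_to_points(ranges, start_angle, angle_step, tilt):
    """Convert one planar scan, taken with the scanner pitched up by `tilt`
    radians, into 3D points (x forward, y left, z up)."""
    points = []
    for i, r in enumerate(ranges):
        bearing = start_angle + i * angle_step
        xs, ys = r * math.cos(bearing), r * math.sin(bearing)  # point in the scan plane
        # Rotate the scan plane about the lateral (y) axis by the tilt angle.
        points.append((xs * math.cos(tilt), ys, xs * math.sin(tilt)))
    return points


def build_cloud(scans):
    """Merge scans given as (tilt, ranges, start_angle, angle_step) tuples into one cloud."""
    cloud = []
    for tilt, ranges, start, step in scans:
        cloud.extend(scan_to_points(ranges, start, step, tilt))
    return cloud


# Hypothetical wall 2 m ahead, hit by the straight-ahead beam at three tilt angles.
tilts = [-0.3, 0.0, 0.3]
cloud = build_cloud([(b, [2.0 / math.cos(b)], 0.0, 0.01) for b in tilts])
```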

L. C. Goron, L. Tamas, I. Reti, G. Lazea, 3D Laser Scanning System and 3D Segmentation of Urban Scenes, Proceedings of the 17th IEEE International Conference on Automation, Quality and Testing, Robotics, Romania, 2010.

Motion planning is an essential capability of autonomous robots. Given the start and goal coordinates as input, the robot has to plan its actions, including collision-free movements. Probabilistic methods have become widely used in recent years because they can solve high-dimensional problems in acceptable computation time. The best-known techniques are Probabilistic Roadmap Methods (PRM) and Rapidly-exploring Random Trees (RRT). In our case the latter was used: starting from the initial map, a tree is grown to create the area of free cells. These cells form the nodes of an undirected connectivity graph, in which two nodes are connected if they belong to adjacent cells.
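A minimal RRT sketch in plain Python follows. The map query `is_free`, the goal bias, the step size and the wall in the example are hypothetical. Edges are collision-checked at only ten intermediate points, which is a simplification.

```python
import math
import random

# Illustrative RRT sketch; is_free, parameters and the map are hypothetical.


def rrt(start, goal, is_free, bounds, step=0.5, goal_tol=0.5, max_iter=5000, seed=0):
    """Grow a Rapidly-exploring Random Tree from start until it gets within
    goal_tol of goal. Returns the path as a list of points, or None."""
    rng = random.Random(seed)
    nodes, parent = [start], [None]
    for _ in range(max_iter):
        # Sample a random configuration, biased towards the goal 10% of the time.
        if rng.random() < 0.1:
            target = goal
        else:
            target = (rng.uniform(*bounds[0]), rng.uniform(*bounds[1]))
        # Extend the nearest tree node by at most one step towards the sample.
        i = min(range(len(nodes)), key=lambda k: math.dist(nodes[k], target))
        near, d = nodes[i], math.dist(nodes[i], target)
        if d == 0:
            continue
        f = min(step, d) / d
        new = (near[0] + f * (target[0] - near[0]), near[1] + f * (target[1] - near[1]))
        # Collision-check the edge at ten intermediate points (a simplification).
        if not all(is_free((near[0] + s / 10 * (new[0] - near[0]),
                            near[1] + s / 10 * (new[1] - near[1]))) for s in range(1, 11)):
            continue
        nodes.append(new)
        parent.append(i)
        if math.dist(new, goal) <= goal_tol:
            # Follow parent links back to the root to recover the path.
            path, k = [], len(nodes) - 1
            while k is not None:
                path.append(nodes[k])
                k = parent[k]
            return path[::-1]
    return None


# Hypothetical 10 m x 10 m office with a wall at 4 <= x <= 6, 0 <= y <= 7.
def is_free(p):
    return not (4.0 <= p[0] <= 6.0 and p[1] <= 7.0)


path = rrt((1.0, 1.0), (9.0, 1.0), is_free, ((0.0, 10.0), (0.0, 10.0)))
```

RRT paths are feasible but usually jagged, so they are often shortened or smoothed before execution.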

Probabilistic Cell Decomposition of the Office Map

The cell decomposition continues until every cell is classified as either possibly collision-free or possibly colliding. In either case, a cell cannot be smaller than an imposed minimum threshold, which in a real application is the size of the autonomous vehicle. The office map is divided into minimal rectangles with an area of 0.25 m²; if multiple adjacent free cells are found, they are merged into larger cells.
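The decomposition and the connectivity graph can be sketched as shown below, under two assumptions. First, cells are classified probabilistically by testing random sample points against a hypothetical `is_free` map query. Second, the sketch works top-down, splitting mixed cells as a quadtree down to the minimum size, instead of merging minimal cells bottom-up; both approaches end with large free cells and small cells only near obstacles. To stay conservative, mixed cells at the minimum size are treated as colliding. The function names, the minimum side length and the map are hypothetical.

```python
import random

# Illustrative sketch; function names, sizes and the map are hypothetical.


def decompose(cell, is_free, min_size, rng, samples=50):
    """Recursively split an axis-aligned cell (x, y, w, h) into leaves labelled
    'free' or 'colliding', classifying each cell by random sample points."""
    x, y, w, h = cell
    hits = [is_free((x + rng.random() * w, y + rng.random() * h)) for _ in range(samples)]
    if all(hits):
        return [(cell, "free")]        # possibly collision-free
    if not any(hits):
        return [(cell, "colliding")]   # possibly colliding
    if w / 2 < min_size or h / 2 < min_size:
        return [(cell, "colliding")]   # mixed but at minimum size: stay conservative
    hw, hh = w / 2, h / 2
    leaves = []
    for sub in ((x, y, hw, hh), (x + hw, y, hw, hh), (x, y + hh, hw, hh), (x + hw, y + hh, hw, hh)):
        leaves += decompose(sub, is_free, min_size, rng, samples)
    return leaves


def adjacent(a, b, eps=1e-9):
    """True if cells a and b share an edge segment of positive length."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    touch_x = abs(ax + aw - bx) < eps or abs(bx + bw - ax) < eps  # common vertical line
    touch_y = abs(ay + ah - by) < eps or abs(by + bh - ay) < eps  # common horizontal line
    overlap_x = min(ax + aw, bx + bw) - max(ax, bx) > eps
    overlap_y = min(ay + ah, by + bh) - max(ay, by) > eps
    return (touch_x and overlap_y) or (touch_y and overlap_x)


def connectivity_graph(leaves):
    """Undirected graph: nodes are free cells, edges join adjacent free cells."""
    free = [cell for cell, label in leaves if label == "free"]
    edges = {i: [] for i in range(len(free))}
    for i in range(len(free)):
        for j in range(i + 1, len(free)):
            if adjacent(free[i], free[j]):
                edges[i].append(j)
                edges[j].append(i)
    return free, edges


# Hypothetical 4 m x 4 m map whose lower-left quarter is occupied; minimum side 0.5 m.
def office_free(p):
    return not (p[0] < 2.0 and p[1] < 2.0)


leaves = decompose((0.0, 0.0, 4.0, 4.0), office_free, 0.5, random.Random(0))
free_cells, edges = connectivity_graph(leaves)
```

A graph search (for example breadth-first search) over `edges` then yields a sequence of free cells from the start cell to the goal cell.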

I. Szoke, A. Majdik, D. Lupea, L. Tamas, Gh. Lazea, Autonomous Mapping in Polluted Environments, Hungarian Journal of Industrial Chemistry, Veszprém, Hungary, 2010.

I. Szoke; L. Tamas; Gh. Lazea; M. Popa; A. Majdik, Path Planning With Markovian Processes, 6th International Conference on Informatics in Control, Automation and Robotics, Italy, 2009, pp.: 479-482, ISBN: 978-989-674-000-9

I. Szoke; L. Tamas; Gh. Lazea; M. Popa; A. Majdik, Path Planning and Dynamic Objects Detection, 14th International Conference on Advanced Robotics, Germany, 2009, pp. 1-6, ISBN: 978-1-4244-4855-5

Fig. 1. Active braking system on a commercial car

Fig. 2. Video with results

Joint work of Paul Sucala, Rosu Cristian and Levente Tamas.

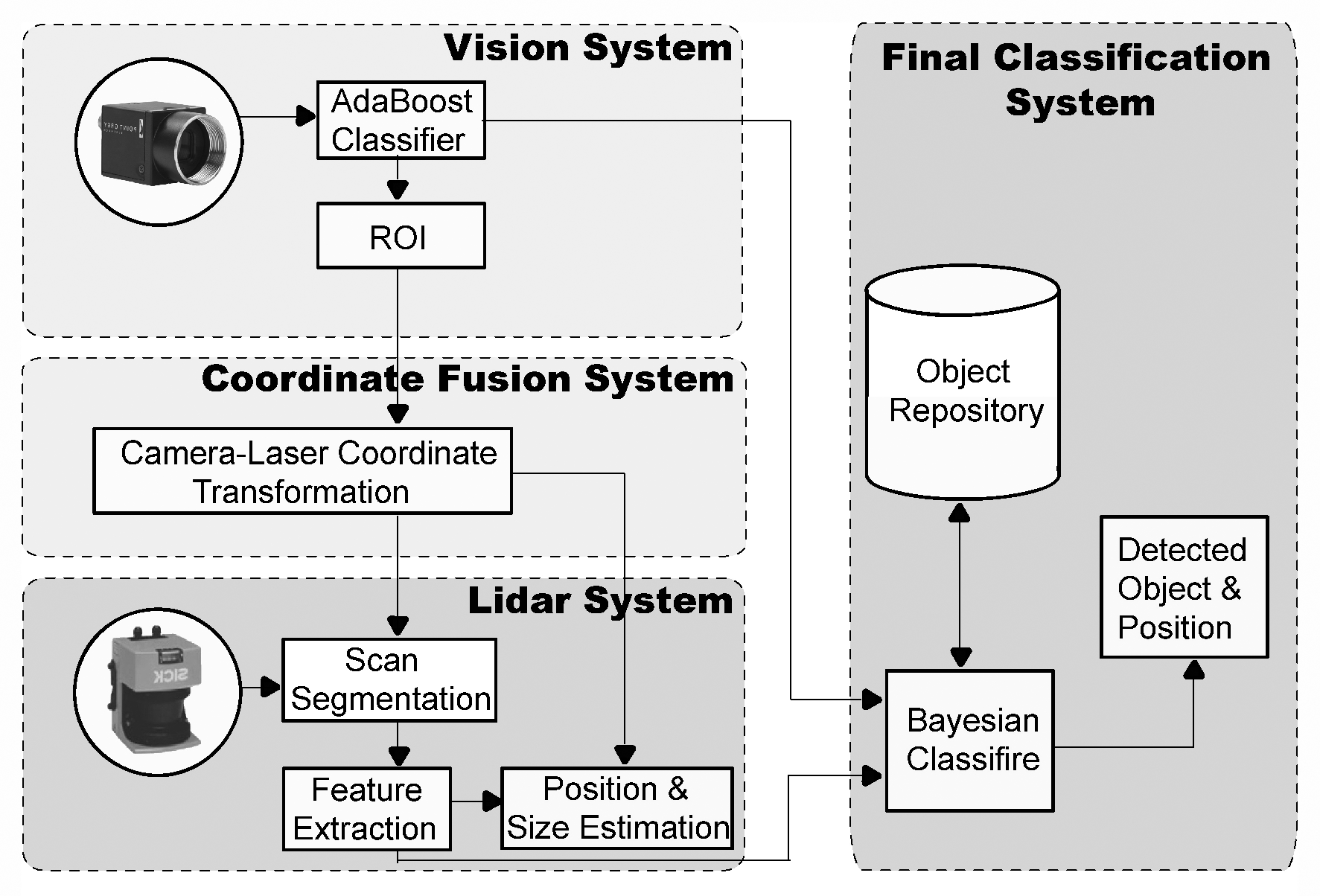

Laser and Vision Based Object Tracking

The purpose of this experiment was to validate the classification and tracking algorithms developed in earlier phases of our research group's work. The first picture shows the general architecture of the system, and the video next to it shows the results of the experiments.

Fig. 1. GMM leg pair

Fig. 2. Classification results for GMM leg pairs

L. Tamas, M. Popa, Gh. Lazea, I. Szoke, A. Majdik, Lidar and Vision Based People Detection and Tracking, Journal of Control Engineering and Applied Informatics, Vol.: 12, No.: 2, Romania, 2010, pp.: 30-35, ISSN 1454-8658

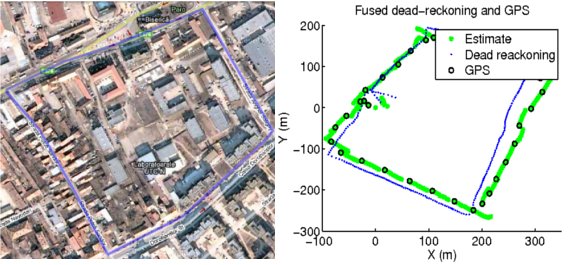

Fused Odometer & GPS Data Mapping

Outdoor map from Google Earth and a custom map built with a custom odometer, composed of a high-precision digital compass and a PS2 optical mouse sensor adapted for a bike. The odometer was used for mapping and as a reference for the GPS measurements.
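The odometer combination can be sketched as dead reckoning. The mouse sensor gives the travelled distance, and the compass gives the heading, measured clockwise from north. The fusion step shown here is a simple inverse-variance weighting, which is the static form of a Kalman update. The thesis's actual fusion method is not described here, so this choice is an assumption, as are the function names and the variances in the example.

```python
import math

# Illustrative sketch; function names, variances and the route are hypothetical.


def dead_reckon(start, steps):
    """Integrate (distance, heading) pairs into (east, north) positions.
    distance: travelled distance from the mouse sensor (m)
    heading: compass heading in radians, measured clockwise from north."""
    x, y = start
    track = [start]
    for dist, heading in steps:
        x += dist * math.sin(heading)  # east component
        y += dist * math.cos(heading)  # north component
        track.append((x, y))
    return track


def fuse(odo, odo_var, gps, gps_var):
    """Inverse-variance weighted fusion of two position estimates.
    Returns (fused position, fused variance)."""
    k = odo_var / (odo_var + gps_var)  # weight given to the GPS fix
    pos = tuple(o + k * (g - o) for o, g in zip(odo, gps))
    return pos, (1 - k) * odo_var


# Hypothetical ride: 10 m north, then 10 m east; then a GPS fix with equal uncertainty.
track = dead_reckon((0.0, 0.0), [(10.0, 0.0), (10.0, math.pi / 2)])
fused, fused_var = fuse((10.0, 10.0), 4.0, (12.0, 10.0), 4.0)
```

The fused variance is smaller than either input variance, which is why combining the odometer with GPS gives a smoother track than either sensor alone.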

Fig. 1. Custom odometer

Fig. 2. Fused odometer and GPS map

L. Tamas: Sensor Fusion Based Position Estimation for Mobile Robots, PhD thesis, 2009.